Code Review

Code review sessions are where VibeRails orchestrates AI models to analyse your codebase and discover bugs, security vulnerabilities, and code quality issues. Each session produces a structured list of findings that you can triage, tag, and fix.

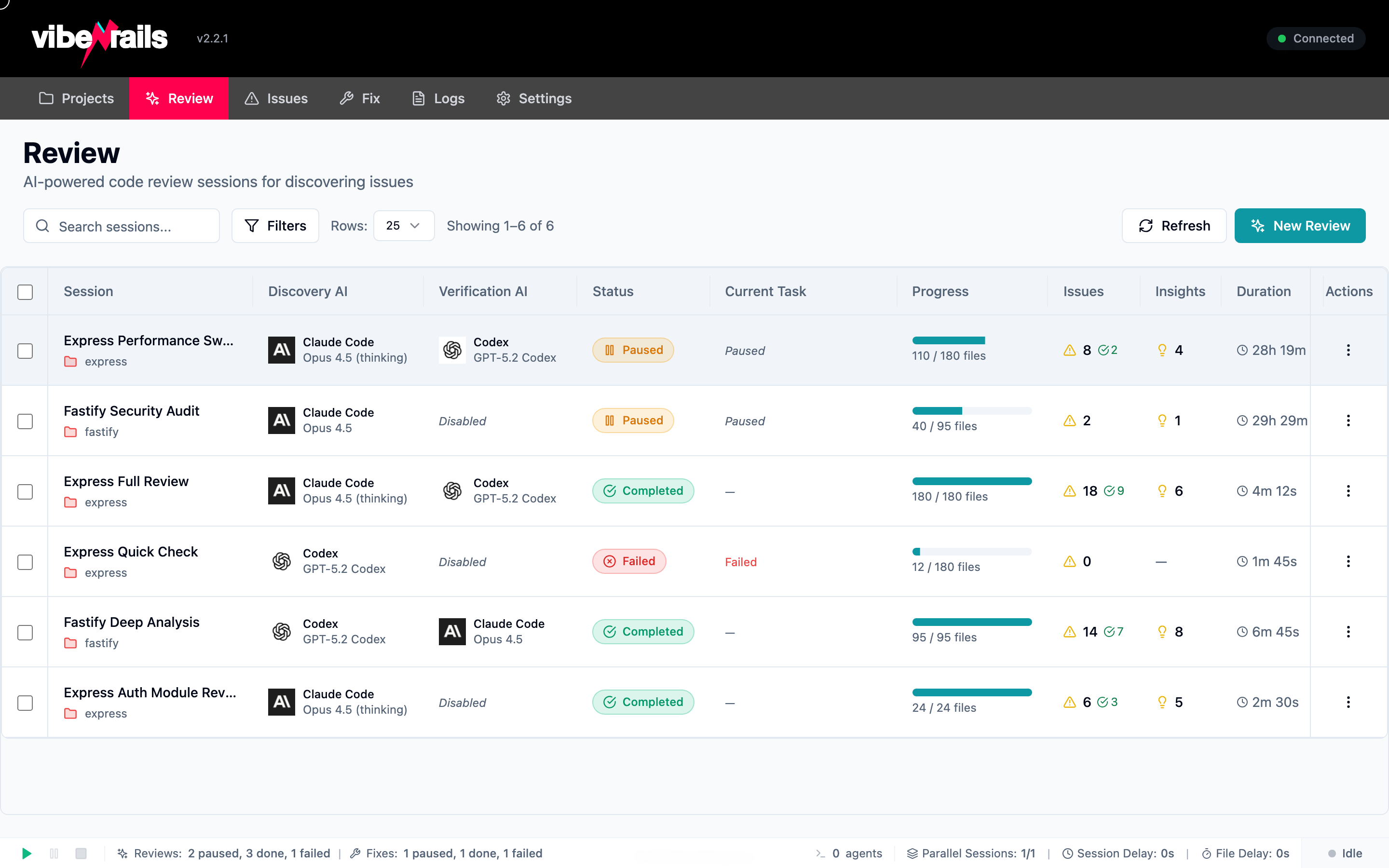

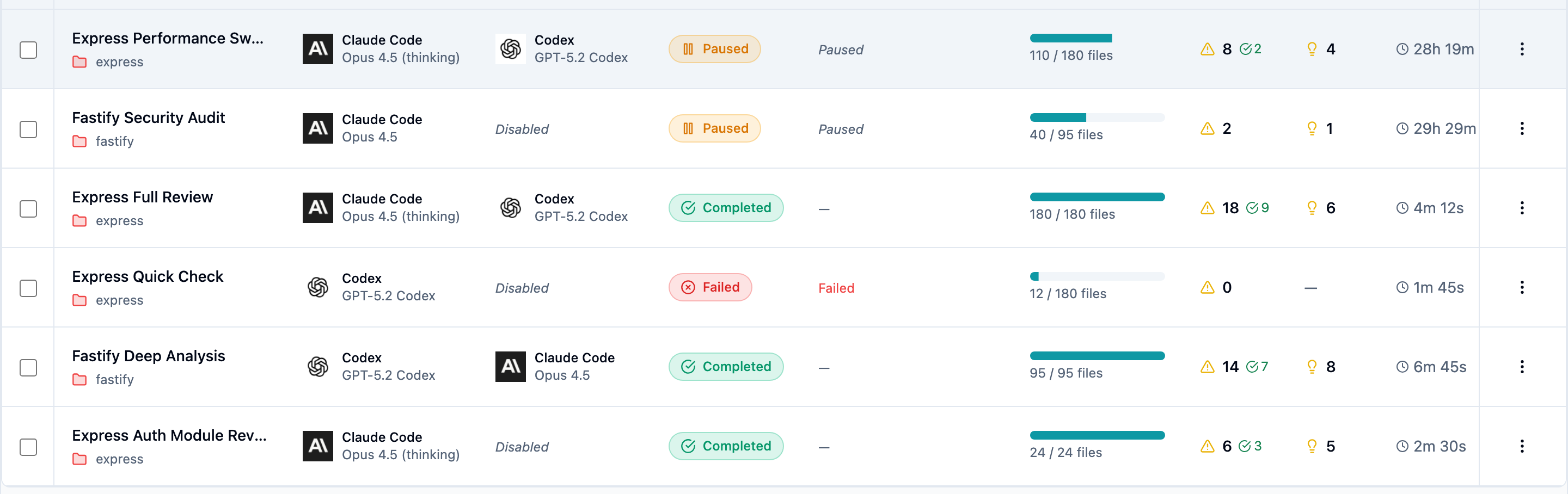

Sessions Overview

The Code Review screen shows all review sessions for the active project. Each row displays the session name, the AI model used, the number of issues found, the session status, and when it was created.

Click any row to open the session detail view, which shows the full list of discovered issues along with a summary of findings by severity and category.

AI Model Configuration

VibeRails supports multiple AI backends for code review. When creating a session, you choose which model to use. The model cell in the sessions table shows the provider icon and model name at a glance.

Available models include:

- Claude Code (Anthropic) — deep reasoning with strong security analysis capabilities.

- Codex (OpenAI) — fast analysis optimised for common code patterns.

Status Types

Each review session moves through a defined lifecycle. Status badges in the table make it easy to see where every session stands:

- Queued — the session is waiting to start.

- Scanning — VibeRails is indexing the project files and sending them to the AI model.

- Reviewing — the AI model is actively analysing the code.

- Verifying — a second-pass verification is running to confirm findings.

- Complete — the session has finished and all issues are available.

- Failed — the session encountered an error (check the session detail for diagnostics).
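The lifecycle above can be read as a small state machine. The following is a minimal sketch of that idea, not VibeRails code: the `SessionStatus` enum, the `ALLOWED_TRANSITIONS` table, and both helper functions are hypothetical names chosen for illustration. It assumes that any active stage can fail and that a session may go straight from Reviewing to Complete when the optional verification pass is skipped.

```python
from enum import Enum


class SessionStatus(Enum):
    """Status names taken from the list above; the enum itself is hypothetical."""
    QUEUED = "Queued"
    SCANNING = "Scanning"
    REVIEWING = "Reviewing"
    VERIFYING = "Verifying"
    COMPLETE = "Complete"
    FAILED = "Failed"


# Assumed transitions: each stage advances to the next one, any active stage
# can fail, and Reviewing may skip Verifying when verification is not run.
ALLOWED_TRANSITIONS = {
    SessionStatus.QUEUED: {SessionStatus.SCANNING, SessionStatus.FAILED},
    SessionStatus.SCANNING: {SessionStatus.REVIEWING, SessionStatus.FAILED},
    SessionStatus.REVIEWING: {
        SessionStatus.VERIFYING,
        SessionStatus.COMPLETE,
        SessionStatus.FAILED,
    },
    SessionStatus.VERIFYING: {SessionStatus.COMPLETE, SessionStatus.FAILED},
    SessionStatus.COMPLETE: set(),
    SessionStatus.FAILED: set(),
}


def can_transition(current: SessionStatus, target: SessionStatus) -> bool:
    """Return True if moving from `current` to `target` is allowed."""
    return target in ALLOWED_TRANSITIONS[current]


def is_terminal(status: SessionStatus) -> bool:
    """Complete and Failed have no outgoing transitions."""
    return not ALLOWED_TRANSITIONS[status]
```

Under these assumptions, Complete and Failed are the only terminal states, which matches how the status badges are described: every other badge indicates a session that is still in progress.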

Creating a Review Session

Click New Review to launch the creation wizard. The wizard guides you through three steps:

- Scope — choose which files or directories to include. By default, the entire project is reviewed.

- Model & Prompt — select the AI model and optionally customise the review prompt.

- Confirm — review the session summary and estimated cost, then start the review.
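To make the three wizard steps concrete, here is a rough sketch of the choices a session might capture. It is illustrative only: `ReviewRequest` and its fields are hypothetical names, not a VibeRails API. The sketch assumes an empty path list stands for the default "entire project" scope, and it leaves out the estimated cost because this guide does not say how that figure is calculated.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReviewRequest:
    """Hypothetical container for the choices made in the creation wizard."""

    # Step 2, Model & Prompt: the model must be chosen explicitly.
    model: str
    # Step 1, Scope: an empty list is assumed to mean "review the entire project".
    include_paths: List[str] = field(default_factory=list)
    # Step 2, Model & Prompt: None is assumed to mean "use the default prompt".
    custom_prompt: Optional[str] = None

    def summary(self) -> str:
        """Step 3, Confirm: a one-line summary to check before starting."""
        scope = ", ".join(self.include_paths) if self.include_paths else "entire project"
        prompt = "custom" if self.custom_prompt else "default"
        return f"scope={scope}; model={self.model}; prompt={prompt}"
```

For example, `ReviewRequest(model="Codex").summary()` returns `"scope=entire project; model=Codex; prompt=default"`, reflecting the defaults described in the steps above.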

Verification Pass

Once the initial review pass finishes, VibeRails can optionally run a verification pass. During verification, a second AI model (or the same model with a different prompt) re-examines each finding to confirm it is genuine and not a false positive.

Verified issues receive a confidence score. Issues that cannot be verified are flagged for manual review. This two-pass approach is designed to reduce false positives in your issue list.
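The two-pass idea can be sketched in a few lines. This is a simplified illustration rather than the actual VibeRails implementation: `Finding`, `verify_findings`, and the `verifier` callback are hypothetical, and the confidence score is assumed to be a number between 0 and 1. The verifier stands in for the second model call and returns `None` when it cannot confirm a finding.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Finding:
    """Hypothetical finding record produced by the first review pass."""
    title: str
    confidence: Optional[float] = None  # set only when verification succeeds
    needs_manual_review: bool = False


def verify_findings(
    findings: List[Finding],
    verifier: Callable[[Finding], Optional[float]],
) -> Tuple[List[Finding], List[Finding]]:
    """Run the second pass and split findings into (verified, manual review).

    `verifier` is a stand-in for the second model: it returns an assumed
    0-to-1 confidence score, or None if the finding could not be verified.
    """
    verified: List[Finding] = []
    manual: List[Finding] = []
    for finding in findings:
        score = verifier(finding)
        if score is None:
            # Unverified findings are flagged rather than discarded.
            finding.needs_manual_review = True
            manual.append(finding)
        else:
            finding.confidence = score
            verified.append(finding)
    return verified, manual
```

Note that unverified findings are kept and flagged instead of being dropped, which mirrors the behaviour described above: nothing disappears from the issue list without a person looking at it.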

Session Details

Click any completed session to open the detail dialog. This view shows:

- A summary panel with issue counts broken down by severity and category.

- A timeline of the session lifecycle (queued, scanning, reviewing, verifying, complete).

- The full issue list with inline code snippets and severity badges.

- Session metadata including model, prompt, file scope, and token usage.

From the detail view you can jump directly into Triage Mode to review findings one by one, or select issues to send to a Fix Session.
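As an illustration of what the summary panel reports, the sketch below counts issues by severity and by category. It assumes issues are available as plain dictionaries with `severity` and `category` keys; that shape, the `summarise` function, and the sample values are hypothetical and not taken from a VibeRails export format.

```python
from collections import Counter
from typing import Dict, List


def summarise(issues: List[Dict[str, str]]) -> dict:
    """Count issues by severity and by category (hypothetical issue shape)."""
    by_severity = Counter(issue["severity"] for issue in issues)
    by_category = Counter(issue["category"] for issue in issues)
    return {
        "total": len(issues),
        "by_severity": dict(by_severity),
        "by_category": dict(by_category),
    }


# Sample data invented for this example.
sample_issues = [
    {"severity": "high", "category": "security"},
    {"severity": "low", "category": "quality"},
    {"severity": "high", "category": "bug"},
]
summary = summarise(sample_issues)
# summary["by_severity"] == {"high": 2, "low": 1}
```

A breakdown like this is a quick way to decide where to start in Triage Mode, for instance by working through the highest-severity findings first.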